- Lavelle Road, Bangalore

- contact@basofa.com

Large Language Models (LLMs) have made headlines for their impressive natural language processing capabilities. However, their massive size and heavy resource requirements have limited who can use them and where. Enter the Small Language Model (SLM): a compact, efficient alternative with diverse use cases.

SLMs are smaller counterparts of LLMs. They have significantly fewer parameters, typically from a few million to a few billion, whereas an LLM can have hundreds of billions or even trillions.

This difference in size brings several advantages:

1. Efficiency: SLMs require less computational power and memory, making them suitable for deployment on smaller devices or even edge computing scenarios. This opens up opportunities for real-world applications like on-device chatbots and personalized mobile assistants.

2. Accessibility: With lower resource requirements, SLMs are more accessible to a broader range of developers and organizations. This increases the reach of AI, allowing smaller teams and individual researchers to explore the power of language models without significant infrastructure investments.

3. Customization: SLMs are easier to fine-tune for specific domains and tasks. This enables specialized models tailored to niche applications, which can deliver higher accuracy on those tasks.
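To put the efficiency point in rough numbers: the memory needed just to hold a model's weights is approximately the parameter count times the bytes stored per parameter (bits per parameter ÷ 8). The TypeScript sketch below is a back-of-the-envelope estimate under that assumption only; it ignores the KV cache, activations, and runtime overhead, so real memory use is higher. The function name is illustrative, and 3.8 billion is Phi-3-mini's published parameter count.

```typescript
// Back-of-the-envelope estimate of the memory needed to store model weights.
// Assumption: weights dominate; KV cache, activations and runtime overhead are ignored.
function estimateWeightMemoryGB(parameterCount: number, bitsPerParameter: number): number {
  const bytes = parameterCount * (bitsPerParameter / 8);
  return bytes / 1e9; // decimal gigabytes
}

// Phi-3-mini has about 3.8 billion parameters.
const phi3MiniParams = 3.8e9;
for (const bits of [16, 8, 4]) {
  console.log(`Phi-3-mini @ ${bits}-bit: ~${estimateWeightMemoryGB(phi3MiniParams, bits).toFixed(1)} GB`);
}

// For comparison, a hypothetical 400-billion-parameter LLM at 16-bit precision.
console.log(`400B LLM @ 16-bit: ~${estimateWeightMemoryGB(400e9, 16).toFixed(0)} GB`);
```

This gives roughly 7.6 GB for Phi-3-mini at 16-bit and about 1.9 GB at 4-bit, versus around 800 GB for a 400-billion-parameter model at 16-bit, which helps explain why a quantized SLM is a realistic fit for register-class hardware while the largest LLMs are not.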

Phi models are a family of open models developed by Microsoft. According to Microsoft's Phi-3 announcement (reference 2), they are highly capable and cost-effective small language models that outperform models of the same size and the next size up across a variety of language, reasoning, coding, and math benchmarks.

These are available on Microsoft Azure AI Studio, Hugging Face and Ollama.

Use Case

This blog post shows how the D365 Store Commerce POS can interact with small language models hosted locally on the POS register.

Why run locally?

1. Data Privacy – Everything stays on your machine

2. Low Latency – No internet round-trips, so responses are faster

3. Offline Mode – AI features keep working without an internet connection

4. Custom Control – Update and configure models to fit your needs

Prerequisites

1. Dynamics 365 Store Commerce App

2. Hardware Station configured

3. Ollama installed

4. The phi3:mini model pulled via Ollama
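Before wiring up the POS, it can help to confirm that the last prerequisite is actually met. Ollama's local API exposes `GET /api/tags`, which lists the models that have been pulled. The sketch below checks such a response body for the `phi3:mini` tag; it reads only the `name` field of each entry, and the helper name `isModelPulled` is hypothetical.

```typescript
// Only the field this check needs from Ollama's GET /api/tags response.
interface OllamaTagsResponse {
  models?: { name: string }[];
}

// Hypothetical helper: true if modelTag is among the locally pulled models.
function isModelPulled(tagsJson: string, modelTag: string): boolean {
  const tags = JSON.parse(tagsJson) as OllamaTagsResponse;
  return (tags.models ?? []).some((m) => m.name === modelTag);
}

// Illustrative response body, trimmed to the relevant field.
const sampleTagsJson = '{"models":[{"name":"phi3:mini"}]}';
console.log(isModelPulled(sampleTagsJson, "phi3:mini")); // true
```

On the register, the same list is available from the `ollama list` command, or from `http://localhost:11434/api/tags` when queried from code. Note that the match is on the exact tag, so a model pulled as `phi3` would appear as `phi3:latest` instead.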

Instructions

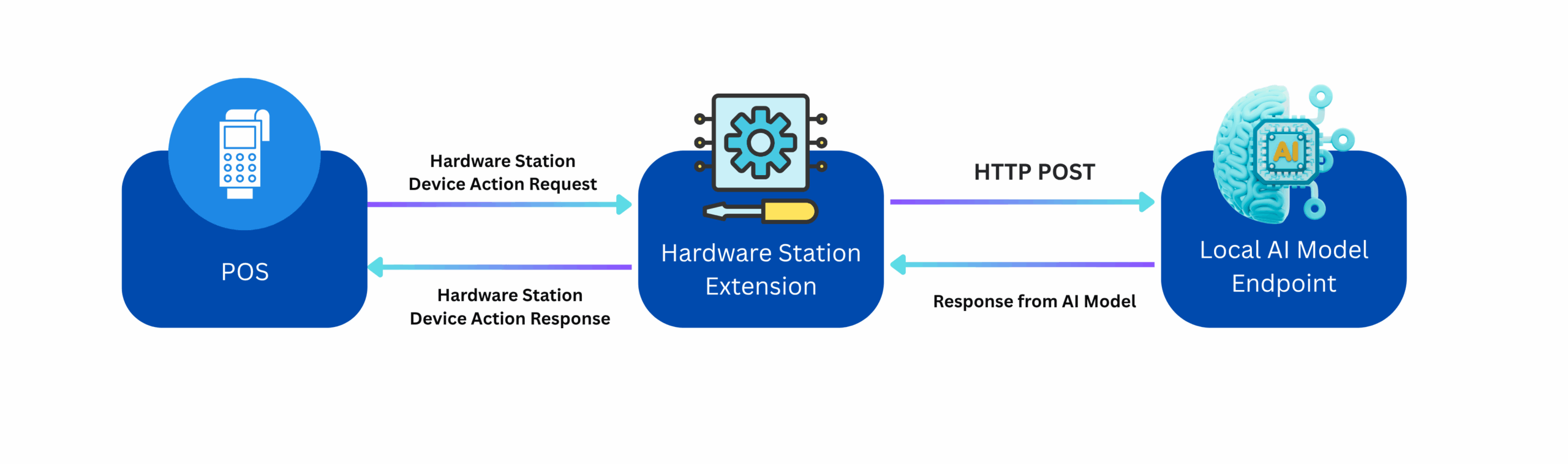

High-Level Solution Design

1. Install Ollama

Download from: https://ollama.com/download

2. Download the Phi-3 Mini model

Open PowerShell as an administrator and run the following command:

```powershell
ollama pull phi3:mini
```

3. Load and Run the Model

Run the following command in PowerShell:

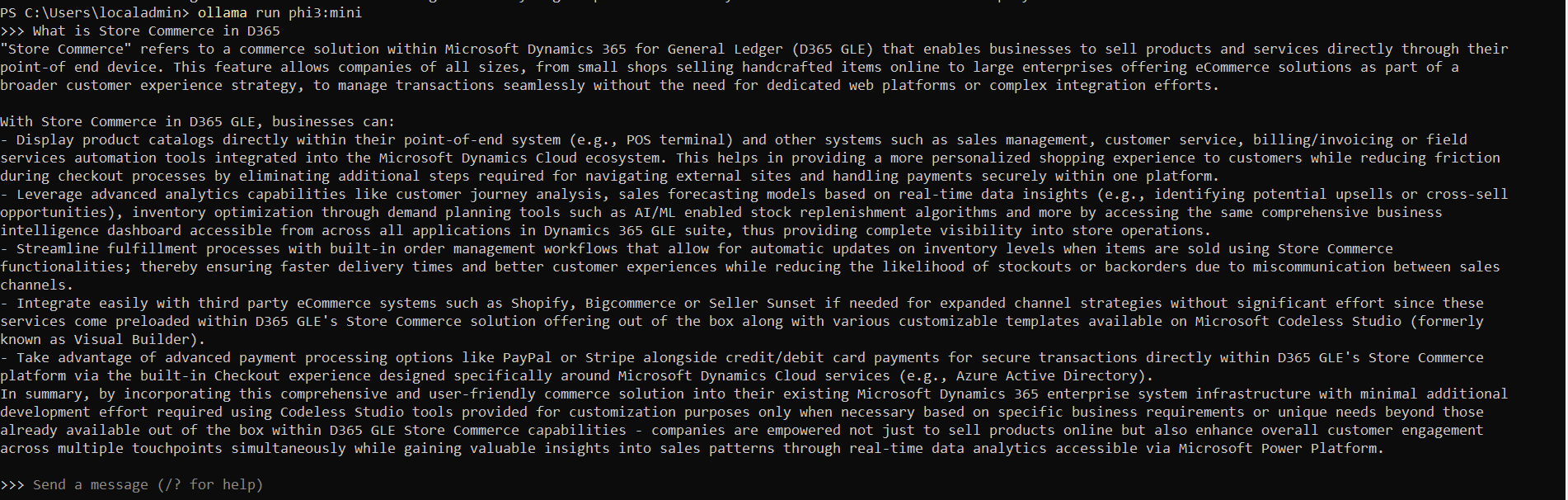

```powershell
ollama run phi3:mini
```

This starts an interactive session in which you can ask the phi3:mini model questions directly, like a chatbot.

4. Test the Phi-3 model through its API using PowerShell

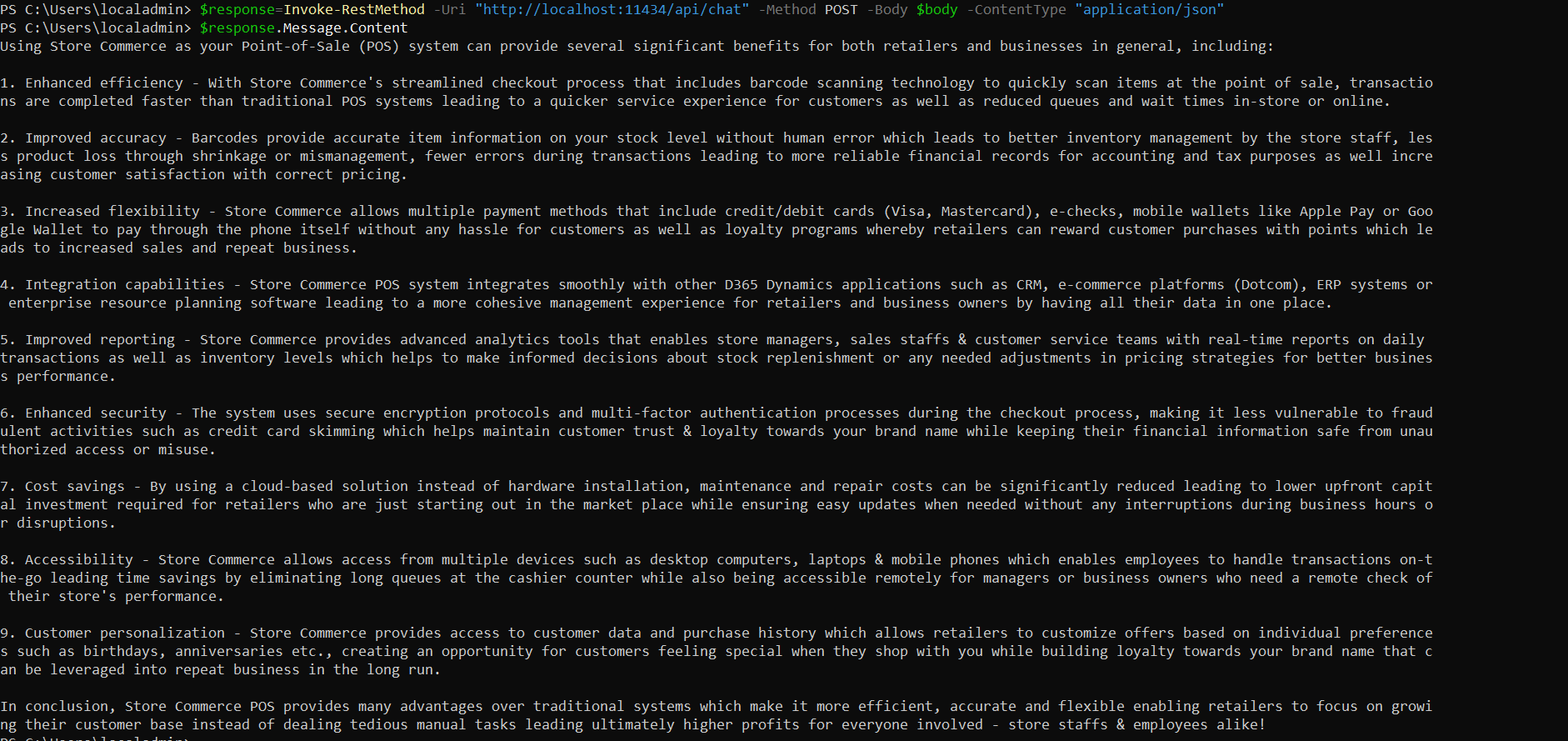

Here, instead of chatting with the model directly, we call the REST endpoint that Ollama serves locally (by default at http://localhost:11434), just like any other API.

Run the following three commands in PowerShell:

```powershell
$body = @{
    model    = "phi3:mini"
    stream   = $false
    messages = @(
        @{
            role    = "system"
            content = "You are a helpful D365 Commerce assistant."
        },
        @{
            role    = "user"
            content = "What are the advantages of Store Commerce POS?"
        }
    )
} | ConvertTo-Json -Depth 10

$response = Invoke-RestMethod -Uri "http://localhost:11434/api/chat" -Method POST -Body $body -ContentType "application/json"

$response.message.content
```
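The same call can be made from any language that can send an HTTP POST. The TypeScript sketch below mirrors the PowerShell request: it builds the `/api/chat` body with `stream` set to false and reads the reply from `message.content`, which is where Ollama places the assistant's text in a non-streaming response. The `post` parameter is an assumption of this sketch, used so the transport (for example `fetch`) can be swapped or faked in tests; the function names are illustrative.

```typescript
const OLLAMA_CHAT_URL = "http://localhost:11434/api/chat";

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatRequest {
  model: string;
  stream: boolean;
  messages: ChatMessage[];
}

// Builds the same body as the PowerShell $body hashtable.
function buildChatRequest(model: string, system: string, question: string): ChatRequest {
  return {
    model,
    stream: false, // ask for one complete JSON object instead of streamed chunks
    messages: [
      { role: "system", content: system },
      { role: "user", content: question },
    ],
  };
}

// In a non-streaming response, the reply text is in message.content.
function extractReply(responseJson: string): string {
  const parsed = JSON.parse(responseJson) as { message?: { content?: string } };
  return parsed.message?.content ?? "";
}

// Sends the request through an injected POST function and returns the reply text.
async function askModel(
  post: (url: string, body: string) => Promise<string>,
  request: ChatRequest,
): Promise<string> {
  const responseJson = await post(OLLAMA_CHAT_URL, JSON.stringify(request));
  return extractReply(responseJson);
}
```

With `fetch`, the transport could be `(url, body) => fetch(url, { method: "POST", headers: { "Content-Type": "application/json" }, body }).then((r) => r.text())`.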

Hardware Station Integration

Now integrate Store Commerce with the local model using a Hardware Station extension.

Hardware Station controller sample:

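```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;
using Microsoft.Dynamics.Commerce.Runtime.Hosting.Contracts; // IController, RoutePrefix, HttpPost
using Newtonsoft.Json;

[RoutePrefix("LocalAIModel")]
public class LocalAIModelController : IController
{
    // Ollama's local chat endpoint (default port 11434).
    private const string ApiUrl = "http://localhost:11434/api/chat";

    // Reuse a single HttpClient instead of creating one per request.
    private static readonly HttpClient SharedHttpClient = new HttpClient();

    [HttpPost]
    public async Task<string> SendMessage(LocalAIModelRequest request)
    {
        try
        {
            // Static sample sales order used to ground the model's answers.
            string context = @"{
  ""SalesOrder"": {
    ""SalesId"": ""013106"",
    ""CustomerId"": ""004007"",
    ""CustomerName"": ""Owen Tolley"",
    ""TotalAmountInclusiveOfAllTaxes"": 430.24,
    ""TaxAmount"": 25.31,
    ""AmountDue"": 0,
    ""AmountPaid"": 0,
    ""SalesLines"": [
      {
        ""LineNumber"": 1,
        ""ItemName"": ""Fashion Sunglasses"",
        ""Quantity"": 1.0,
        ""Price"": 404.93
      }
    ],
    ""UnifiedDeliveryInformation"": {
      ""DeliveryMode"": ""60"",
      ""DeliveryDate"": ""2025-06-15T00:00:00-05:00"",
      ""DeliveryAddress"": {
        ""Name"": ""Houston Store"",
        ""FullAddress"": ""5015 Westheimer Rd\nHouston, TX 77056 \nUSA"",
        ""City"": ""Houston"",
        ""State"": ""TX"",
        ""ZipCode"": ""77056"",
        ""ThreeLetterISORegionName"": ""USA""
      }
    }
  }
}";

            var requestBody = new
            {
                model = "phi3:mini",
                stream = false, // return one complete JSON response instead of a stream
                messages = new[]
                {
                    new { role = "system", content = "Use the provided data to answer the user's question." },
                    new { role = "user", content = $"{context}\n\nQuestion: {request.Message}" }
                }
            };

            string jsonBody = JsonConvert.SerializeObject(requestBody);

            using (var content = new StringContent(jsonBody, Encoding.UTF8, "application/json"))
            {
                var response = await SharedHttpClient.PostAsync(ApiUrl, content).ConfigureAwait(false);

                if (!response.IsSuccessStatusCode)
                {
                    return $"Error occurred while calling the Ollama API: {response.StatusCode}";
                }

                string responseJson = await response.Content.ReadAsStringAsync().ConfigureAwait(false);
                var result = JsonConvert.DeserializeObject<OllamaResponse>(responseJson);

                // The assistant's reply is in message.content of the non-streaming response.
                return result?.message?.content ?? string.Empty;
            }
        }
        catch (OperationCanceledException ocex)
        {
            // HttpClient reports a timeout as TaskCanceledException, a subclass of OperationCanceledException.
            return $"Error: Request timed out: {ocex.Message}";
        }
        catch (Exception ex)
        {
            return $"Error: {ex.Message}";
        }
    }
}

// Request sent from POS; the property name matches the POS payload { Message: ... }.
public class LocalAIModelRequest
{
    public string Message { get; set; }
}

// Minimal shape of Ollama's non-streaming /api/chat response (only the fields used above).
public class OllamaResponse
{
    public OllamaMessage message { get; set; }
}

public class OllamaMessage
{
    public string role { get; set; }
    public string content { get; set; }
}
```

POS Code to Call Hardware Station Extension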

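```typescript
// HardwareStationDeviceActionRequest is imported from "PosApi/Consume/Peripherals".
private button1ClickHandler(): void {
    this.questionText = document.getElementById("questionText") as HTMLTextAreaElement;
    const questionTxt: string = this.questionText.value;
    const answerSpan = document.getElementById("answerArea") as HTMLSpanElement;

    // Payload shape must match LocalAIModelRequest on the Hardware Station side.
    const aiRequest: { Message: string } = { Message: questionTxt };

    // "LocalAIModel" is the controller's RoutePrefix; "SendMessage" is the controller method.
    const localAIModelHWSRequest = new HardwareStationDeviceActionRequest("LocalAIModel", "SendMessage", aiRequest);

    this.isProcessing = true;
    this.context.runtime.executeAsync(localAIModelHWSRequest)
        .then((airesponse) => {
            if (!airesponse.canceled && airesponse.data && airesponse.data.response) {
                answerSpan.innerText = airesponse.data.response;
            }
            // Reset the busy flag whether or not an answer came back.
            this.isProcessing = false;
        })
        .catch((reason) => {
            console.error("Error executing AI model:", reason);
            this.isProcessing = false;
        });
}
```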

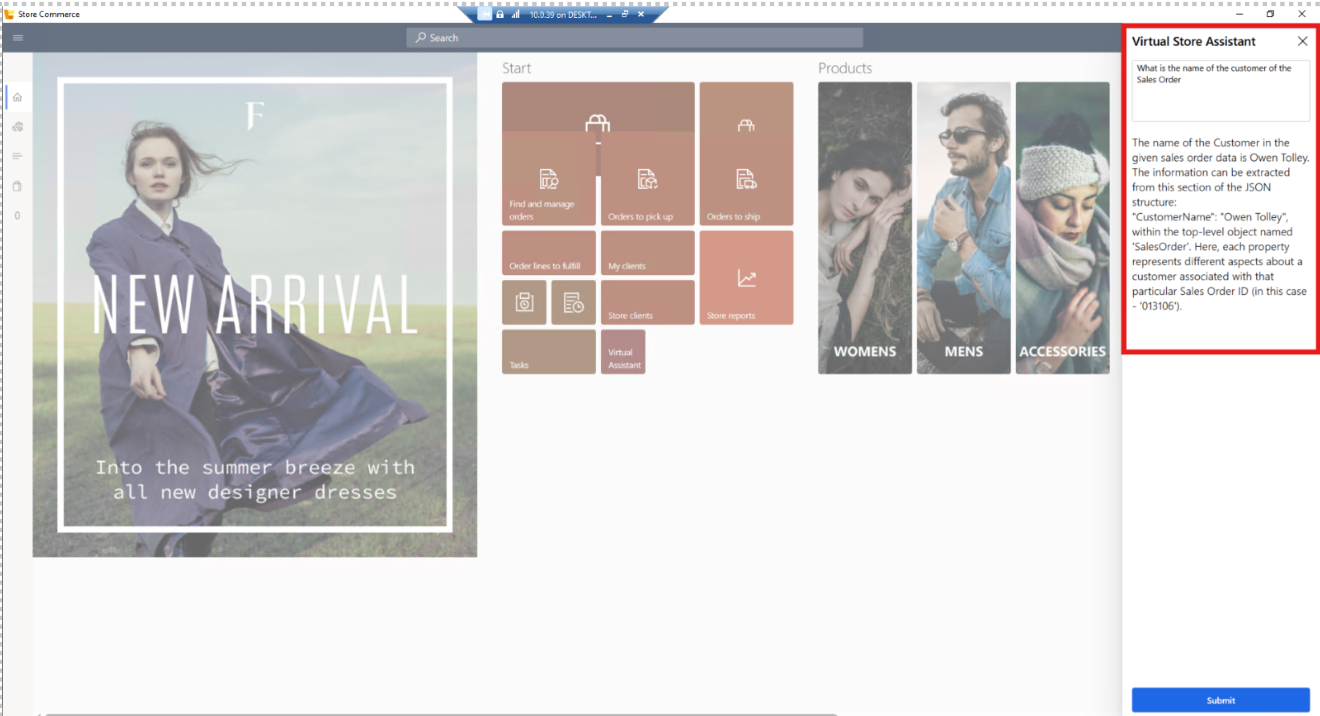

This creates a dialog box in which users can ask questions. Instead of asking generic questions, we provide the model with static sales order data and prompt it to answer based on that data.
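Writing the context JSON by hand is error-prone: a single missing comma makes it invalid. A sketch of the alternative, assuming the order data is available as an object, is to serialize it and append the question, similar to the layout the controller uses. It is written in TypeScript here; on the C# side, `JsonConvert.SerializeObject` plays the same role. The `SalesOrderContext` type and `buildGroundedUserMessage` function are hypothetical and cover only a subset of the sample order's fields.

```typescript
// Hypothetical typed subset of the sample sales order.
interface SalesLine {
  LineNumber: number;
  ItemName: string;
  Quantity: number;
  Price: number;
}

interface SalesOrderContext {
  SalesId: string;
  CustomerName: string;
  TotalAmountInclusiveOfAllTaxes: number;
  SalesLines: SalesLine[];
}

// Serializes the order (always valid JSON) and appends the user's question.
function buildGroundedUserMessage(order: SalesOrderContext, question: string): string {
  const context = JSON.stringify({ SalesOrder: order }, null, 2);
  return `${context}\n\nQuestion: ${question}`;
}

const order: SalesOrderContext = {
  SalesId: "013106",
  CustomerName: "Owen Tolley",
  TotalAmountInclusiveOfAllTaxes: 430.24,
  SalesLines: [{ LineNumber: 1, ItemName: "Fashion Sunglasses", Quantity: 1, Price: 404.93 }],
};

console.log(buildGroundedUserMessage(order, "Who is the customer on this order?"));
```

Serializing from a typed object also makes it straightforward to swap the static sample for real order data later.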

The system takes the question from the text box, sends it to the local AI model through the Hardware Station extension, and displays the result in the dialog box.

The model successfully answers the question based on the provided data.
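One consequence of the controller design is that failures come back as ordinary strings beginning with "Error", so the POS cannot tell them apart from answers by status alone. A hypothetical helper such as the one below could classify the result before it is written to the dialog. It is a sketch: a prefix check can misfire if a genuine answer happens to start with "Error", and returning a structured object from the controller (for example a success flag plus text) would be more robust.

```typescript
// Result of classifying the string returned by the LocalAIModel controller.
interface AiAnswer {
  ok: boolean;
  text: string;
}

// Hypothetical helper: the sample controller reports failures as strings
// starting with "Error", so classify by that prefix.
function interpretControllerResult(result: string | null | undefined): AiAnswer {
  if (!result) {
    return { ok: false, text: "No answer was returned by the local AI model." };
  }
  return { ok: !result.startsWith("Error"), text: result };
}

console.log(interpretControllerResult("Error: Request timed out: A task was canceled."));
```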

Coming Soon

Part 2: Using function calling to let the SLM call APIs based on user prompts, for example to fetch a specific order or check product inventory.

References

1. https://medium.com/@nageshmashette32/small-language-models-slms-305597c9edf2

2. https://azure.microsoft.com/en-us/blog/introducing-phi-3-redefining-whats-possible-with-slms/