- Lewes, Delaware

- contact@basofa.com

While AI has emerged as a transformative force, building it into applications has traditionally demanded substantial resources and a hefty investment. Azure OpenAI Service is changing this landscape.

With Azure AI services, businesses can access advanced AI models and technologies without investing in expensive infrastructure of their own. Just as importantly, the platform is built to meet enterprise requirements for security, compliance, and availability, which makes AI deployment more secure and reliable.

Read on to learn more about Azure OpenAI Service and how it supports secure and compliant AI development for enterprise customers.

Understanding Azure OpenAI

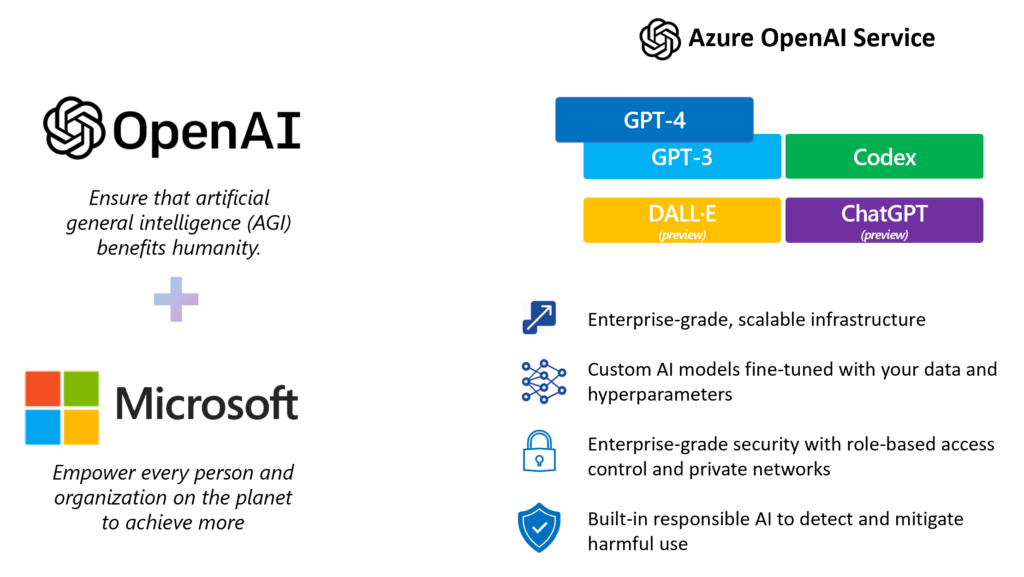

Azure OpenAI is a platform that provides pre-trained AI models and APIs for natural language processing tasks. It offers the resources and tools needed to build intelligent applications quickly and efficiently.

With this service, you can use AI models such as GPT for tasks like sentiment analysis, text generation, and language translation, making it possible to build anything from chatbots to powerful analytical tools. It also provides access to the ChatGPT models through Azure OpenAI.
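To make "tapping into" a model concrete, here is a minimal sketch of a sentiment-analysis call against the Azure OpenAI chat completions REST endpoint, using only the Python standard library. It assumes a resource endpoint, a model deployment name, and the `2024-02-01` API version; the endpoint and deployment names are hypothetical placeholders, and the prompt wording is illustrative. The function builds the request without sending it, so you can inspect it first.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute your own resource endpoint,
# deployment name, and an api-version your resource supports.
ENDPOINT = "https://my-resource.openai.azure.com"
DEPLOYMENT = "my-gpt-deployment"
API_VERSION = "2024-02-01"


def build_sentiment_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completions request that asks for a one-word sentiment label."""
    url = (
        f"{ENDPOINT}/openai/deployments/{DEPLOYMENT}"
        f"/chat/completions?api-version={API_VERSION}"
    )
    body = {
        "messages": [
            {
                "role": "system",
                "content": "Classify the sentiment of the user's text as "
                "positive, negative, or neutral. Reply with one word.",
            },
            {"role": "user", "content": text},
        ],
        # Deterministic, short output suits a classification task.
        "temperature": 0,
        "max_tokens": 5,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        # Key-based authentication uses the "api-key" header.
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


if __name__ == "__main__":
    req = build_sentiment_request("The onboarding was quick and painless.", api_key="<your-key>")
    print(req.get_method(), req.full_url)
```

Passing the returned object to `urllib.request.urlopen` would send it. Many teams instead use the official `openai` Python package, whose `AzureOpenAI` client wraps this same endpoint.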

By providing OpenAI’s models as a cloud-based service, Microsoft removes barriers to entry that have traditionally made AI inaccessible to many enterprises. This democratization of AI has allowed businesses of all sizes and industries to use AI in their operations. The same technology also underpins AI tools such as Dynamics 365 Copilot, Dynamics 365 Generative AI, and D365 Generative AI Reporting.

With Azure OpenAI, enterprises can build and deploy AI applications backed by enterprise-grade SLAs and security. The service also helps them comply with regulations such as data privacy laws.

Security, Compliance, and Privacy in Azure OpenAI Service

Data security and compliance are vital considerations when deploying AI services. Azure OpenAI Service provides robust security features and adheres to industry standards.

1. Ensuring Data Security in AI Solutions

Azure OpenAI Service has various security measures in place to protect data and ensure privacy. Notable measures for securing data in Azure OpenAI projects include the following:

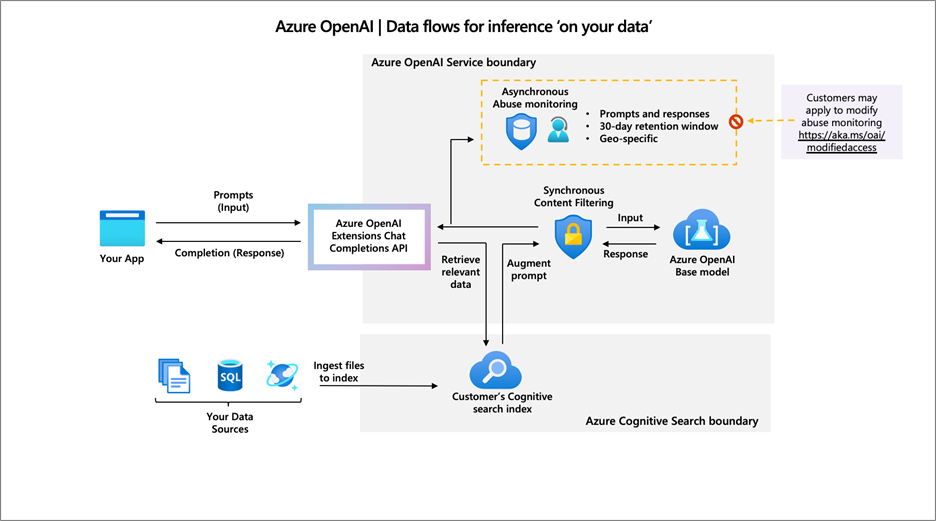

a. Microsoft may store prompts and completions for up to 30 days to monitor for abuse. This data is stored in the same geography as the Azure OpenAI resource, encrypted at rest, and logically isolated by Azure subscription and API credentials.

b. By default, the service encrypts stored data with FIPS 140-2 compliant 256-bit AES encryption, and traffic between users and the service, including calls to the ChatGPT models, travels over encrypted HTTPS (TLS) connections.
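Microsoft handles encryption on the service side, but your client code also decides how securely it connects. A minimal sketch using Python's standard `ssl` module, assuming TLS 1.2 is the minimum protocol version you want to allow:

```python
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Create a client-side SSL context that verifies certificates and refuses anything older than TLS 1.2."""
    # create_default_context() turns on certificate verification and hostname checking.
    context = ssl.create_default_context()
    # Refuse TLS 1.0/1.1 so calls to the endpoint only use modern protocol versions.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


if __name__ == "__main__":
    ctx = make_tls_context()
    print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

The context can then be passed as `urllib.request.urlopen(request, context=ctx)`, so every call verifies the server certificate and hostname before any data is sent.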

c. Microsoft also designs its AI services around six principles of responsible AI:

• Fairness: AI systems should treat all people fairly and not discriminate against anyone.

• Reliability and Safety: AI systems should perform reliably and safely.

• Privacy and Security: AI systems should protect user data and follow robust security measures.

• Inclusiveness: AI systems should be beneficial and accessible to everyone.

• Accountability: People and organizations remain accountable for how they use AI systems.

• Transparency: Clear information should be available about how AI systems work.

2. Regulatory Compliance in AI Development

Azure holds certifications and supports regulations that help validate its commitment to regulatory compliance, including the following:

• The General Data Protection Regulation (GDPR) is a European Union regulation that protects the personal data and privacy of people in the EU. Azure OpenAI supports GDPR compliance, helping ensure that user data is handled securely and in line with the regulation's principles.

• ISO/IEC 27001 is an internationally recognized standard for information security management systems. This certification demonstrates the service's commitment to managing information security risks.

• Azure OpenAI Service supports compliance with Health Insurance Portability and Accountability Act (HIPAA) regulations. This helps healthcare companies protect sensitive patient data when using Azure OpenAI models and reduce their risk of legal repercussions.

By meeting these compliance standards, Azure OpenAI Service gives enterprises assurance that their data is handled carefully and in line with relevant regulations.

3. Access Control and Privacy Measures

In addition to security and compliance, Azure OpenAI maintains privacy, which governs how user data is accessed and used. The platform implements access controls through Azure Active Directory (now Microsoft Entra ID), including role-based and conditional access controls.

This enables enterprises to ensure that only authorized individuals can access their Azure OpenAI models, reducing the risk of data breaches.
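With role-based access control, callers authenticate with a Microsoft Entra ID (formerly Azure Active Directory) access token instead of a shared API key, and Azure checks the caller's role assignments on the resource. The sketch below only shows how such a token is attached to a request; it assumes you have already obtained the token, and the URL, deployment name, and token in the usage are hypothetical placeholders.

```python
import urllib.request

# Token scope used for Azure AI services, including Azure OpenAI.
COGNITIVE_SERVICES_SCOPE = "https://cognitiveservices.azure.com/.default"


def build_entra_request(url: str, access_token: str, body: bytes) -> urllib.request.Request:
    """Attach an Entra ID bearer token instead of a static api-key header."""
    if not access_token:
        raise ValueError("a Microsoft Entra ID access token is required")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            # The token identifies the caller; Azure then checks that caller's role
            # assignments (for example "Cognitive Services OpenAI User") on the resource.
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_entra_request(
        "https://my-resource.openai.azure.com/openai/deployments/my-gpt-deployment"
        "/chat/completions?api-version=2024-02-01",
        access_token="<token acquired for COGNITIVE_SERVICES_SCOPE>",
        body=b'{"messages": [{"role": "user", "content": "Hello"}]}',
    )
    print(req.get_method(), req.full_url)
```

One common way to obtain the token is the `azure-identity` package, for example `DefaultAzureCredential().get_token(COGNITIVE_SERVICES_SCOPE).token`. That dependency is left out here so the sketch stays standard-library only. Because access now depends on role assignments rather than a shared secret, revoking a user's role cuts off their access without rotating keys.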

Azure OpenAI's privacy protections also keep customer data, including prompts, completions, embeddings, and any fine-tuned models, from being accessed by other customers or users. This data is not made available to OpenAI and is not used to improve OpenAI models, Azure OpenAI Studio, or any other Microsoft or third-party products or services.

Azure OpenAI also promotes ethical and responsible AI use through monitoring and filtering systems that detect and prevent the generation of offensive, abusive, and harmful content.

These systems continuously evaluate prompts and AI-generated content, identifying patterns that indicate a policy violation or harm. When such content is detected, the platform can block it, which helps prevent harmful content from being created and propagated and supports the ethical use of AI in enterprises.
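An application sees this filtering through the API response. Based on Microsoft's documented response format at the time of writing (check the current API reference), each choice carries a `finish_reason`, which is `"content_filter"` when output was withheld, and a `content_filter_results` object with per-category `filtered` and `severity` fields. The sketch below summarizes those fields; the sample response is hand-written for illustration, not captured service output.

```python
from typing import Any, Dict, List, Tuple

# Hand-written example shaped like an Azure OpenAI chat completion response
# (illustrative only, not real service output).
SAMPLE_RESPONSE: Dict[str, Any] = {
    "choices": [
        {
            "index": 0,
            "finish_reason": "content_filter",
            "content_filter_results": {
                "hate": {"filtered": False, "severity": "safe"},
                "self_harm": {"filtered": False, "severity": "safe"},
                "sexual": {"filtered": False, "severity": "safe"},
                "violence": {"filtered": True, "severity": "medium"},
            },
        }
    ]
}


def filter_report(response: Dict[str, Any]) -> List[Tuple[int, bool, List[str]]]:
    """For each choice, return (index, withheld_by_filter, sorted list of filtered categories)."""
    report = []
    for choice in response.get("choices", []):
        # "content_filter" means the completion was cut off or withheld by the filter.
        withheld = choice.get("finish_reason") == "content_filter"
        results = choice.get("content_filter_results", {})
        flagged = sorted(
            name
            for name, verdict in results.items()
            if isinstance(verdict, dict) and verdict.get("filtered")
        )
        report.append((choice.get("index", 0), withheld, flagged))
    return report


if __name__ == "__main__":
    for index, withheld, flagged in filter_report(SAMPLE_RESPONSE):
        print(f"choice {index}: withheld={withheld}, flagged={flagged}")
```

Checking `finish_reason` before displaying output lets the application show a clear message instead of a silently truncated answer.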

The Bottom Line

Azure OpenAI Service offers the best of both worlds: top-notch AI technology within a secure and compliant framework. This gives you a technological edge along with the peace of mind of using secure AI models.

Although Microsoft secures the Azure OpenAI platform itself, securing your end of the interaction is your responsibility. By setting appropriate access controls and protecting your Azure credentials, you complete this shared responsibility and help ensure you stay secure, compliant, and responsible.
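One concrete way to protect your Azure credentials is to keep keys out of source code. A minimal sketch, assuming the endpoint and key live in the `AZURE_OPENAI_ENDPOINT` and `AZURE_OPENAI_API_KEY` environment variables (the names the `openai` package's `AzureOpenAI` client also reads by default); the `redact` helper is a hypothetical addition for safe logging.

```python
import os
from typing import Dict, Mapping, Optional

REQUIRED_SETTINGS = ("AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_API_KEY")


class MissingConfigError(RuntimeError):
    """Raised when a required setting is absent from the environment."""


def load_azure_openai_config(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Read the endpoint and key from environment variables instead of hard-coding them."""
    source = os.environ if env is None else env
    config: Dict[str, str] = {}
    for name in REQUIRED_SETTINGS:
        value = source.get(name, "").strip()
        if not value:
            # Fail fast rather than falling back to a default or an embedded secret.
            raise MissingConfigError(f"{name} is not set")
        config[name] = value
    return config


def redact(secret: str, visible: int = 4) -> str:
    """Mask all but the last few characters so keys never appear in full in logs."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


if __name__ == "__main__":
    try:
        cfg = load_azure_openai_config()
        print("Using key", redact(cfg["AZURE_OPENAI_API_KEY"]))
    except MissingConfigError as exc:
        print(f"Configuration error: {exc}")
```

For production workloads, storing the key in Azure Key Vault, or using Entra ID tokens instead of keys, reduces exposure further.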

Frequently Asked Questions

1. Does Microsoft use your data to train models?

No. Your prompts, completions, and training data are not used to train OpenAI models or to improve Microsoft products or services. Azure OpenAI also keeps each customer's applications and data isolated from other customers' to prevent cross-contamination.

2. Does Azure allow you to keep your data within specific regions?

Yes. Azure's data residency capabilities let you specify the geographic area where your data is processed and stored. This is particularly useful for companies that must meet government standards such as CMMC, which requires certain data to be kept within US boundaries.

3. How do content filters ensure data security in Azure OpenAI Service?

Azure OpenAI Service's content filters classify potentially harmful content into categories such as hate speech, sexual content, violence, and self-harm, and assign each a severity level. This supports legal compliance, user safety, and data integrity. The filters can also be customized, so you can adjust them to match your needs and internal policies.
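The filters themselves are configured on the Azure side. If your internal policies are stricter, you could also check the reported severities in your own code. A minimal sketch, assuming the documented severity levels `safe`, `low`, `medium`, and `high`; `INTERNAL_POLICY` and its thresholds are hypothetical examples, not Microsoft defaults.

```python
from typing import Any, Dict, List

# Severity levels reported by Azure OpenAI content filtering, least to most severe.
SEVERITY_ORDER = ["safe", "low", "medium", "high"]

# Hypothetical internal policy: the highest severity tolerated per category.
INTERNAL_POLICY = {"hate": "low", "sexual": "safe", "violence": "low", "self_harm": "safe"}


def policy_violations(filter_results: Dict[str, Any], policy: Dict[str, str]) -> List[str]:
    """List categories whose reported severity exceeds the tolerance set in `policy`.

    Unknown severity labels raise ValueError, so new labels are not silently ignored.
    """
    violations = []
    for category, max_allowed in policy.items():
        verdict = filter_results.get(category)
        if not verdict or "severity" not in verdict:
            continue  # category not reported for this item
        if SEVERITY_ORDER.index(verdict["severity"]) > SEVERITY_ORDER.index(max_allowed):
            violations.append(category)
    return violations


if __name__ == "__main__":
    sample = {
        "hate": {"filtered": False, "severity": "low"},
        "violence": {"filtered": False, "severity": "medium"},
    }
    print(policy_violations(sample, INTERNAL_POLICY))
```

This client-side check complements, rather than replaces, the service's own filtering, which still runs before content reaches your code.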